By now, we all know that AI is quietly running the chatbots that answer attendee questions, the summarizers that help executives catch up on keynote sessions, and the personalization engines that suggest which booth or breakout to visit next. These tools make experiences smoother, but they also fall under a new set of rules: the EU AI Act, the world’s first comprehensive AI regulation.

If you’re leading events, sponsorships, or a digital product, compliance is about more than checking a legal box. It’s about building and keeping trust with attendees and sponsors. And on August 2, 2025, the Act’s obligations for providers of general-purpose AI (GPAI) models took effect.

TL;DR: EU AI Act Overview for Event Teams

- EU AI Act obligations for GPAI providers took effect on August 2, 2025.

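- Event marketing AI tools like chatbots, summarizers, and personalization engines should disclose when AI is being used; the Act’s transparency obligations for AI systems that interact with people apply from August 2, 2026.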

- Vendor selection is critical; use a lightweight AI compliance checklist.

- August 2026 brings stricter rules for high-risk AI, which may affect tools that profile attendees or personalize their experience.

- Compliance is directly tied to sponsor trust and attendee engagement.

Why the EU AI Act matters to event teams

The EU AI Act is designed to create clear rules for how AI is built, used, and communicated. According to the European Parliament, it introduces transparency obligations for general-purpose models and stricter requirements for high-risk systems, with a phased rollout starting in 2025.

For event leaders, this translates into three big areas:

- Transparency: Attendees need to know when they are interacting with AI.

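- Data sourcing: GPAI providers must publish a summary of the content used to train their models and maintain a copyright policy, so ask vendors how their models are trained and whether copyrighted or sensitive data is included.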

- Risk management: Teams should map each AI tool to a risk level and take steps accordingly. The Act itself distinguishes unacceptable, high, limited, and minimal risk.

In fact, PwC’s Global Compliance Survey 2025 found that 89% of executives are concerned about data privacy and security when adopting AI for compliance activities, a signal that governance and accountability are now central to vendor relationships.

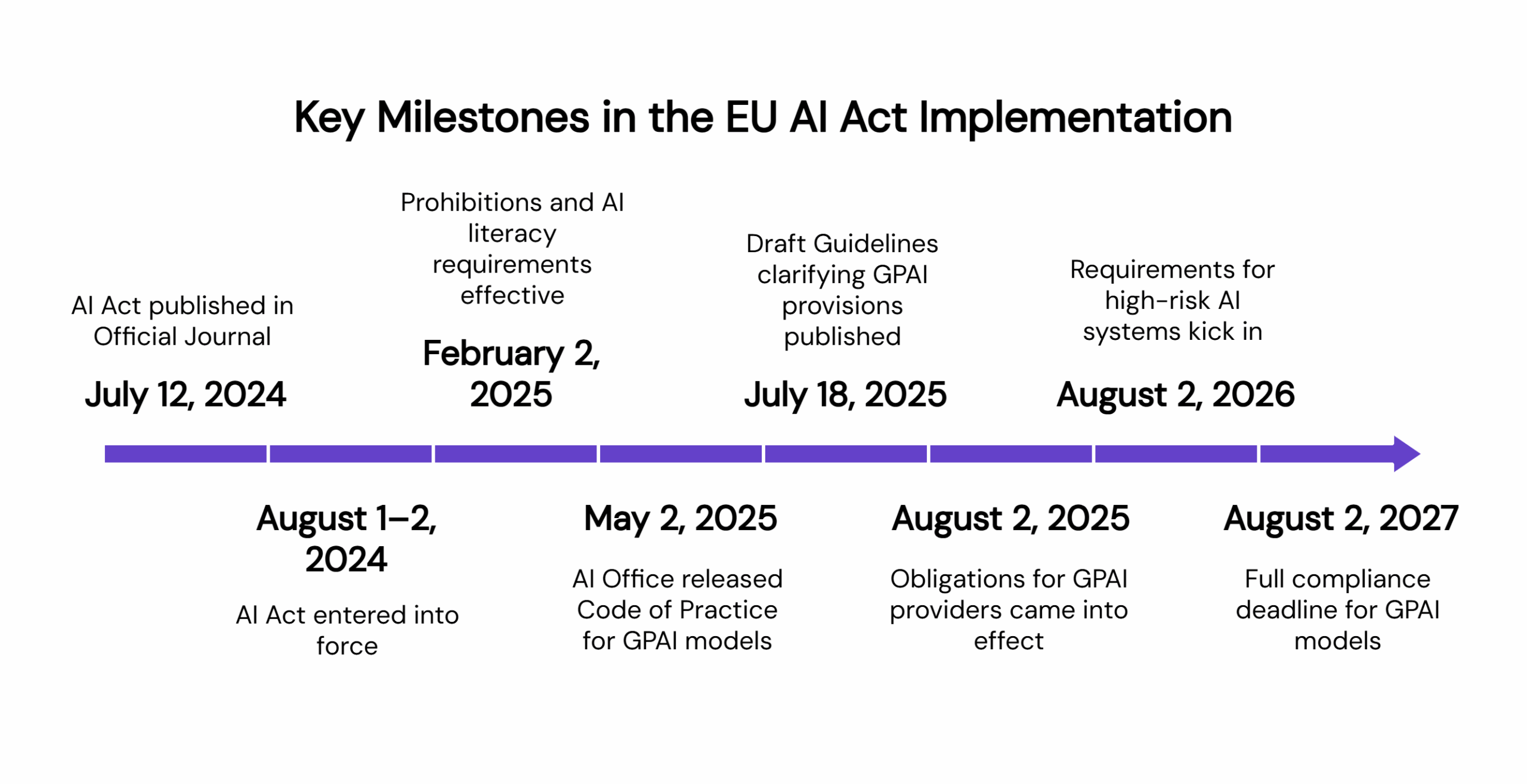

Key dates to remember: EU AI Act implementation timeline

- July 12, 2024

The AI Act was published in the Official Journal of the European Union, marking its formal adoption.

- August 1, 2024

The Act entered into force, starting a phased rollout in which its obligations apply over the following three years.

- February 2, 2025

Prohibitions against ‘unacceptable-risk’ AI systems took effect. At the same time, AI literacy requirements became mandatory.

- July 10, 2025

The final General-Purpose AI Code of Practice was published, a voluntary framework that helps GPAI providers meet the Act’s requirements.

- July 18, 2025

The European Commission published guidelines clarifying the scope of obligations for GPAI providers.

- August 2, 2025

Obligations for GPAI providers came into effect. Member States were also required to designate national competent authorities, report on them, and share their details publicly.

- August 2, 2026

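Most of the Act’s remaining provisions will apply, including requirements for high-risk AI systems (conformity assessments, transparency, and post-market surveillance) and the transparency obligations for AI systems that interact with people, such as chatbots.

- August 2, 2027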

Providers of GPAI models that were already on the market before August 2, 2025, must achieve full compliance.

Transparency in attendee-facing AI tools

Let’s say your event chatbot is answering FAQs about session timings. Under the Act, you’ll need to make it clear that it is AI-powered, not a human rep. Extending the same disclosure to tools that summarize sessions or recommend networking matches is good practice.

The trick is doing this without ruining the experience. A short, well-placed line like “This assistant is AI-powered. For urgent queries, contact our help desk” keeps you compliant while still being user-friendly. Think of it as a seatbelt; you hope no one notices it during the drive, but everyone feels safer knowing it’s there.
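For teams wiring a disclosure into their own chatbot front end, the idea can be sketched in a few lines. This is a minimal Python illustration, not any vendor’s API: the `DISCLOSURE` text reuses the example line above, while the `DisclosedChat` class and its `reply` method are hypothetical names. The wrapper prepends the notice to the first reply of each conversation.

```python
from dataclasses import dataclass, field
from typing import Set

# Example disclosure wording from the article; adjust to your brand voice.
DISCLOSURE = "This assistant is AI-powered. For urgent queries, contact our help desk."


@dataclass
class DisclosedChat:
    """Hypothetical wrapper that adds an AI disclosure to chatbot replies."""

    # IDs of conversations that have already seen the disclosure.
    disclosed: Set[str] = field(default_factory=set)

    def reply(self, conversation_id: str, bot_answer: str) -> str:
        # Prepend the disclosure to the first reply in each conversation only.
        if conversation_id not in self.disclosed:
            self.disclosed.add(conversation_id)
            return f"{DISCLOSURE}\n\n{bot_answer}"
        return bot_answer


chat = DisclosedChat()
print(chat.reply("visitor-42", "The keynote starts at 9:00 in Hall A."))
print(chat.reply("visitor-42", "Lunch is served from 12:30."))
```

Showing the notice at the start of each conversation keeps it visible at the first interaction without cluttering every answer; a persistent "AI assistant" label in the chat header is an equally reasonable design.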

Transparency also reassures sponsors. If attendees know how their data is being used, they are more likely to engage with sponsor activations without hesitation.

Lightweight vendor assessment checklist

Most event teams won’t build their own AI models. They’ll buy or license them. That means vendor selection is where most compliance risks live. Here’s a lightweight checklist you can apply in procurement conversations:

- Data sourcing clarity

Ask: Where does the training data come from? Is it licensed or scraped?

- Bias and fairness assurances

Ask: How does the vendor test for and correct bias?

- Alignment with the GDPR and the EU AI Act

Ask: Can you share compliance documentation?

- Auditability

Ask: Is there a reporting trail if something goes wrong?

- Human oversight

Ask: How is human review built into the tool?
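If procurement teams want to track vendor answers consistently, the five questions above can be kept as a simple structured record. The Python sketch below is illustrative only: the `CHECKLIST` keys, the `open_items` helper, and the sample vendor answers are hypothetical. It flags every question a vendor has left unanswered so follow-ups are easy to spot.

```python
from typing import Dict, List

# The five checklist questions from this article, keyed by a short label.
CHECKLIST = {
    "data_sourcing": "Where does the training data come from? Is it licensed or scraped?",
    "bias_fairness": "How does the vendor test for and correct bias?",
    "gdpr_ai_act": "Can you share compliance documentation?",
    "auditability": "Is there a reporting trail if something goes wrong?",
    "human_oversight": "How is human review built into the tool?",
}


def open_items(answers: Dict[str, str]) -> List[str]:
    """Return the checklist questions a vendor has not answered yet.

    An answer counts as missing if its key is absent or its text is blank.
    """
    return [
        question
        for key, question in CHECKLIST.items()
        if not answers.get(key, "").strip()
    ]


# Hypothetical answers collected during a procurement call.
vendor_answers = {
    "data_sourcing": "Licensed datasets plus client-provided content.",
    "bias_fairness": "Quarterly bias audits with documented results.",
    "auditability": "",
}

for question in open_items(vendor_answers):
    print("Follow up:", question)
```

Here three questions (documentation, auditability, and human oversight) would be flagged for follow-up.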

Mapping these to risk categories is useful. A chatbot that answers simple event FAQs may be low risk, while an AI tool that automatically scores attendees for lead qualification is closer to high risk.

Balancing compliance with sponsor trust

Event marketing teams are under pressure to prove ROI. That often means offering sponsors more targeted activations and better attendee data. But with regulations tightening, sponsor trust hinges on showing you’re using AI responsibly.

According to Edelman’s 2024 Trust Barometer, 79% of people say businesses should explain how they use new technologies ethically. For sponsors, this isn’t just a PR issue; it’s a renewal issue. Being able to say “our AI tools are fully compliant with the EU AI Act” can make the difference between retaining and losing a major partner.

Preparing for 2026

The Act doesn’t stop at transparency. In 2026, the scope expands to high-risk systems, which may include personalization algorithms that profile attendees. That means more documentation, risk assessments, and potentially third-party audits.

Event leaders can prepare by:

- Training staff to handle compliance questions from attendees and sponsors.

- Including compliance obligations in all RFPs for event tech vendors.

- Building disclosure templates that can be reused across tools and activations.

Think of 2025 as practice laps; by 2026, you’ll be expected to run the full race.

Most organizations are still figuring this out: over 50% say they’re unclear on what the EU AI Act actually requires or how to categorize their AI use cases by risk. That means a clear, proactive strategy will set you apart.

Here’s a simple 5-point action plan to stay ahead:

- Audit your current use of AI in chatbots, summarizers, and personalization.

- Disclose clearly in attendee-facing tools.

- Vet vendors with the lightweight checklist above.

- Train staff on how to answer AI-related questions.

- Track compliance as a trust metric with sponsors.

The EU AI Act might feel like another regulatory hurdle, but in practice it’s a way to build credibility in a market where attendees and sponsors are increasingly skeptical of data use.

Lightweight AI Compliance Risk Map for Event Marketing Tools

- Chatbots (FAQs): Low risk

- Session Summarizers: Low–Medium risk

- Personalization Engines: Medium risk

- Lead Scoring Tools: Medium–High risk

- Attendee Profiling: High risk
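The same map can be encoded so that each tool in your stack is tagged with a risk level and a next step. This is a minimal sketch that assumes the article’s lightweight levels above (an internal triage aid, not the Act’s legal categories); the `RISK_MAP` and `ACTIONS` dictionaries, the `next_step` function, and the suggested actions are hypothetical examples.

```python
# Lightweight risk levels from the map above (an internal triage aid,
# not the EU AI Act's legal risk categories).
RISK_MAP = {
    "chatbot_faq": "Low",
    "session_summarizer": "Low-Medium",
    "personalization_engine": "Medium",
    "lead_scoring": "Medium-High",
    "attendee_profiling": "High",
}

# Hypothetical follow-up actions for each level.
ACTIONS = {
    "Low": "Add an AI disclosure in the interface.",
    "Low-Medium": "Add a disclosure and spot-check outputs.",
    "Medium": "Disclose, run the vendor checklist, and document data use.",
    "Medium-High": "Run the vendor checklist and require human review of scores.",
    "High": "Complete a documented risk assessment before launch.",
}


def next_step(tool: str) -> str:
    """Return the suggested action for a tool, or a prompt to classify it."""
    level = RISK_MAP.get(tool)
    if level is None:
        return "Unmapped tool: classify it before deployment."
    return f"{level} risk: {ACTIONS[level]}"


for tool in ["chatbot_faq", "lead_scoring", "badge_scanner_ai"]:
    print(tool, "->", next_step(tool))
```

Returning an explicit "unmapped" message, rather than defaulting to low risk, keeps new tools from slipping into production unreviewed.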

Frequently Asked Questions: EU AI Act and Event Marketing

Q: What is the EU AI Act compliance requirement for event marketing?

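A: The EU AI Act requires that people be told when they are interacting with an AI system such as a chatbot (from August 2026), and GPAI providers have had to document training content and copyright policies since August 2025. In practice, event teams should disclose AI use in attendee-facing tools, confirm responsible data sourcing, and assess vendors for compliance.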

Q: Do all AI tools at events need compliance checks?

A: Yes. Even lower-risk tools like chatbots are subject to AI disclosure requirements, and content tools such as session summarizers should be reviewed as well. Higher-risk uses, like attendee profiling, may face stricter rules from 2026.

Q: What should I ask my vendors about AI compliance?

A: Use the AI vendor assessment checklist: data sourcing, bias and fairness, GDPR and EU AI Act alignment, auditability, and human oversight.

Q: How does the EU AI Act affect sponsors?

A: Sponsors care about trust. When CCOs and event leaders can demonstrate AI compliance, sponsors are reassured that attendee data is managed responsibly.

Q: Is this only for EU-based events?

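A: No. The Act can apply to organizations based outside the EU when they offer AI systems in the EU or when their systems’ outputs are used there. If you’re running events with EU attendees or sponsors, the Act may apply even if your company is headquartered elsewhere.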

Q: Under the EU AI Act, does Bridged Media consider itself a provider, deployer, or both?

A: Bridged Media operates as both a provider and a deployer under the EU AI Act, depending on how the AI Agent is implemented:

- Provider: Bridged Media develops custom small language models and delivers an AI Agent platform built specifically for publishers and event marketing teams.

- Deployer: Bridged Media also integrates third-party models and client-owned internal models into workflows, ensuring compliance with EU AI Act regulations on deployment.

Q: Where does Bridged Media store the prompts from website visitors?

A: By default, all visitor prompts are stored in secure, private cloud environments hosted on AWS. Customers can also choose to store prompts and related data on their own private servers or infrastructure, giving them full data sovereignty.

Q: How long does Bridged Media store website visitor prompts?

A: The default data retention period for AI prompts is 1 year. This policy is fully customizable, allowing clients to shorten or extend retention based on their internal AI compliance requirements or data governance policies.
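To make a configurable retention window concrete, here is a minimal sketch with a one-year default. It is an illustration only and does not describe Bridged Media’s actual implementation: the `RetentionPolicy` class, the `purge` function, and the 365-day value standing in for "1 year" are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

Prompt = Tuple[str, datetime]  # (prompt text, time stored, timezone-aware UTC)


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical retention setting; 365 days stands in for the 1-year default."""

    days: int = 365

    def is_expired(self, stored_at: datetime, now: Optional[datetime] = None) -> bool:
        # A prompt expires once it is older than the configured window.
        now = now or datetime.now(timezone.utc)
        return now - stored_at > timedelta(days=self.days)


def purge(prompts: List[Prompt], policy: RetentionPolicy,
          now: Optional[datetime] = None) -> List[Prompt]:
    """Return only the prompts that are still inside the retention window."""
    return [p for p in prompts if not policy.is_expired(p[1], now)]


now = datetime(2025, 9, 1, tzinfo=timezone.utc)
prompts = [
    ("When is the keynote?", now - timedelta(days=30)),
    ("Where is booth 12?", now - timedelta(days=400)),
]

print(len(purge(prompts, RetentionPolicy(), now)))         # 1 (default window)
print(len(purge(prompts, RetentionPolicy(days=14), now)))  # 0 (shortened window)
```

Shortening or extending retention is then a matter of changing `days`, for example `RetentionPolicy(days=730)` for a two-year window.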

Q: Does Bridged Media have a SOC 2 or other security program? Can you provide an independent auditor’s report?

A: Yes. Bridged Media has undergone multiple data security audits during a due diligence process with Informa. The company is pre-certified for SOC 2 and holds ISO 27001 certification. Independent auditor documentation and reports can be provided to demonstrate AI security compliance.

Q: What steps does Bridged Media take to mitigate bias or discriminatory outputs?

A: Bridged Media applies comprehensive AI bias mitigation strategies that include:

- Ongoing monitoring and regular audits to detect bias.

- Diversity checks to ensure datasets are representative.

- Documentation to improve AI model transparency.

- Human oversight (human-in-the-loop) for sensitive use cases.

These safeguards align with responsible AI practices and give customers direct control over bias management in AI systems.

Q: Will Bridged Media’s AI system share data with third parties (such as LLM providers)?

A: No. Bridged Media does not share client data with third-party AI providers or external entities. All data remains securely within the customer’s chosen cloud or private infrastructure. This ensures EU AI Act data compliance, complete confidentiality, and protection of client information.